UPDATE (9 Nov 2014): For those interested in setting up their own scientific twitterbot, see Rob Lanfear’s excellent and easy-to-follow instructions here. Peter Carlton has also outlined another method for setting up a twitterbot here, as has Sho Iwamoto here.

UPDATE (20 Dec 2022): The @fly_papers twitterbot has been permanently suspended due to changes in Twitter’s terms of service. An alternative @flypapers bot is now alive on Mastodon.

A year ago I created a simple “twitterbot” called FlyPapers to stay on top of the Drosophila literature. It tweets links to new abstracts in PubMed and preprints in arXiv from a dedicated Twitter account (@fly_papers). While most ‘bots on Twitter post spam or creative nonsense, an increasing number of people are exploring the use of twitterbots for more productive academic purposes. For example, Rod Page set up the @evoldir twitterbot way back in 2009 as an alternative to receiving email posts to the Evoldir mailing list, and likewise Gordon McNickle developed the @EcoLog_L twitterbot for the Ecolog-L mailing list. Similar to FlyPapers, others have established twitterbots for domain-specific literature feeds, such as @BioPapers for Quantitative Biology preprints on arXiv, @EcoEvoJournals for publications in the areas of Ecology & Evolution and @PlantEcologyBot for papers on Plant Ecology. More recently, Alberto Acerbi developed the @CultEvoBot to post links to blogs and new articles on the topic of cultural evolution. (I recommend reading posts by Rod, Gordon and Alberto for further insight into how and why they established these twitterbots.) One year in, I thought I’d summarize my thoughts on the FlyPapers experiment and make good on a promise I made to describe my set-up in case others are interested.

First, a few words on my motivation for creating FlyPapers. I have been receiving a daily update of all papers in the area of Drosophila in one form or another for nearly 10 years. My philosophy is that it is relatively easy to keep up on a daily basis with what is being published, but it’s virtually impossible to catch up when you let the river of information flow for too long. I first started receiving daily email updates from NCBI, which cluttered up my inbox and often got buried. Then I migrated to using RSS on Google Reader, which led to a similar problem of many unread posts accumulating that needed to be marked as “read”. Ultimately, I realized that what I want from a personalized publication feed (a flow of links to articles that can be quickly scanned and clicked, but which requires no other action and can be ignored when I’m busy) was better suited to a Twitter client than an RSS reader. Moreover, in the spirit of “maximizing the value of your keystrokes”, it seemed that a feed that was useful for me might also be useful for others, and that Twitter was the natural medium to try sharing this feed since many scientists are already using Twitter to post links to papers. Thus FlyPapers was born.

Setting up FlyPapers was straightforward and required no specialist know-how. I first created a dedicated Twitter account with a “catchy” name. Next, I created an account with dlvr.it, which takes an RSS/Twitter/email feed as input and routes the output to the FlyPapers Twitter account. I then set up an RSS feed from NCBI based on a search for the term “Drosophila” and added this as a source to the dlvr.it route. Shortly thereafter, I added an RSS feed for preprints in arXiv using the search term “Drosophila” and added this to the same dlvr.it route. (Unfortunately, neither PeerJ Preprints nor bioRxiv currently has the ability to set up custom RSS feeds, and thus they are not included in the FlyPapers stream.) NCBI and arXiv only push new articles once a day, and each article is posted automatically as a distinct tweet for ease of viewing, bookmarking and sharing. The only gotcha I experienced in setting the system up was making sure, when creating the PubMed RSS feed, to set the “number of items displayed” high enough (=100). If the number of articles posted in one RSS update exceeds the limit you set when you create the PubMed RSS feed, PubMed will post a URL to a PubMed query for the entire set of papers as one RSS item, rather than post links to each individual paper. (For Gordon’s take on how he set up his Twitterbots, see this thread.) [UPDATE 25/2/14: Rob Lanfear has posted detailed instructions for setting up a twitterbot using the strategy I describe above at https://github.com/roblanf/phypapers. See his comment below for more information.]
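For readers curious about what the routing step amounts to, here is a minimal Python sketch of turning each item in an RSS feed into one tweet-sized string. It is not how dlvr.it works internally, which is not described here. The function name `items_to_tweets`, the sample feed, and the 140-character limit with a fixed 23-character link cost are all assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Assumed limits, not taken from the post: 140 characters per tweet,
# with every link counted as a fixed 23 characters.
TWEET_LIMIT = 140
LINK_COST = 23


def items_to_tweets(rss_xml, limit=TWEET_LIMIT, link_cost=LINK_COST):
    """Turn each <item> of an RSS 2.0 document into one tweet-sized string."""
    root = ET.fromstring(rss_xml)
    tweets = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        if not link:
            continue  # skip items that have nothing to link to
        room = limit - link_cost - 1  # one character for the separating space
        if len(title) > room:
            # Shorten the title and mark the cut with an ellipsis.
            title = title[: room - 1].rstrip() + "…"
        tweets.append(f"{title} {link}")
    return tweets


# Hypothetical feed content, used only to exercise the function.
SAMPLE_FEED = """<rss version="2.0"><channel>
<title>hypothetical Drosophila search</title>
<item><title>A made-up paper title about Drosophila</title>
<link>https://example.org/paper/1</link></item>
<item><title>Another made-up title</title>
<link>https://example.org/paper/2</link></item>
</channel></rss>"""

for tweet in items_to_tweets(SAMPLE_FEED):
    print(tweet)
```

Because every `<item>` becomes exactly one tweet, a feed that collapses a large update into a single item pointing at a query would come out as a single tweet, which is why the item limit on the feed matters.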

So, has the experiment worked? Personally, I am finding FlyPapers a much more convenient way to stay on top of the Drosophila literature than any previous method I have used. Apparently others are finding this feed useful as well.

https://twitter.com/StuartJFGrice/status/429362778642456576

One year in, FlyPapers now has 333 followers in 16 countries, which is a far bigger and wider following than I would have ever imagined. Some of the followers are researchers I am familiar with in the Drosophila world, but most are students or post-docs I don’t know, which suggests the feed is finding relevant target audiences via natural processes on Twitter. The account has now posted 3,877 tweets, or ~10-11 tweets per day on average, which gives a rough scale for the amount of research being published annually on Drosophila. Around 10% of tweeted papers are getting retweeted (n=386) or favorited (n=444) by at least one person, and the breadth of topics being favorited/retweeted spans virtually all of Drosophila biology. These facts suggest that developing a twitterbot for domain-specific literature can indeed attract substantial numbers of like-minded individuals, and that automatically tweeting links to articles enables a significant proportion of papers in a field to easily be seen, bookmarked and shared.
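The rates quoted above can be checked with a few lines of Python. The only assumption is that “one year in” means 365 days; the counts are the ones given in the text.

```python
# Counts taken from the paragraph above.
tweets = 3877
retweeted = 386
favorited = 444

# Assumption: "one year in" is treated as exactly 365 days.
days = 365

per_day = tweets / days
retweet_share = retweeted / tweets
favorite_share = favorited / tweets

print(f"{per_day:.1f} tweets per day")
print(f"{retweet_share:.1%} of tweets retweeted")
print(f"{favorite_share:.1%} of tweets favorited")
```

This gives roughly 10.6 tweets per day, with about 10% of tweets retweeted and about 11.5% favorited, in line with the “~10-11 tweets per day” and “around 10%” figures.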

Overall, I’m very pleased with the way FlyPapers is developing. I had hoped that one of the outcomes of this experiment would be to help promote Drosophila research, and this appears to be working. I had not expected it would act as a general hub for attracting Drosophila researchers who are active on Twitter, which is a nice surprise. One issue I hadn’t considered a year ago was the potential that ‘bots like FlyPapers might have to “game” Altmetrics scores. Frankly, any metric that would be so easily gamed by a primitive bot like FlyPapers probably has no real intrinsic value. However, it is true that this bot does add +1 to the Twitter count for all Drosophila papers. My thoughts on this are that any attempt to correct the potential influence of ‘bots on Altmetrics scores should not unduly penalize the real human engagement bots can facilitate, so I’d say it is fair to -1 the original FlyPapers tweets in an Altmetrics calculation, but retain the retweets created by humans.
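To make the suggested correction concrete, here is a small Python sketch of the counting rule: drop tweets posted by a known bot, but keep every tweet or retweet posted by a human, including retweets of the bot. The `Mention` record, the `KNOWN_BOTS` set and the account names are hypothetical, and this is not a description of how any real Altmetrics provider computes its scores.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of bot accounts to discount.
KNOWN_BOTS = frozenset({"fly_papers"})


@dataclass(frozen=True)
class Mention:
    """One tweet that links to a paper (hypothetical record layout)."""
    account: str
    retweet_of: Optional[str] = None  # account being retweeted, if any


def adjusted_twitter_count(mentions, bots=KNOWN_BOTS):
    """Count mentions posted by humans, including their retweets of bots."""
    return sum(1 for m in mentions if m.account not in bots)


mentions = [
    Mention("fly_papers"),                         # the bot's original tweet
    Mention("human_a", retweet_of="fly_papers"),   # human retweet, kept
    Mention("human_b"),                            # independent human tweet
]

print(len(mentions), "raw mentions")
print(adjusted_twitter_count(mentions), "after discounting the bot")
```

The rule filters on who posted a mention, not on where the link first appeared, so human engagement that the bot made possible still counts.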

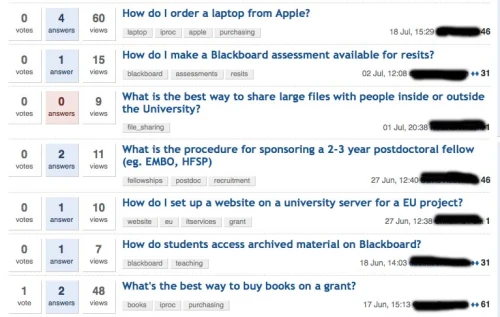

One final consequence of putting all new Drosophila literature onto Twitter that I would not have anticipated is that some tweets have been picked up by other social media outlets, including disease-advocacy accounts that quickly pushed basic research findings out to their target audience.

This final point suggests that there may be wider impacts from having more research articles automatically injected into the Twitter ecosystem. Maybe those pesky twitterbots aren’t always so bad after all.
